Jaideep Saraswat, Nikhil Mall

Artificial Intelligence (AI) has existed for decades, but two landmark events in the past decade have redefined its trajectory. The first was Google’s 2017 research paper ‘Attention Is All You Need’, which introduced the transformer architecture. It replaced recurrence with self-attention, allowing models to capture long-range dependencies efficiently, and this shift enabled the creation and scaling of Large Language Models (LLMs). The second milestone came in late 2022, when OpenAI released ChatGPT to the public and gave anyone with a browser direct access to a capable conversational AI. Together, these breakthroughs made AI one of the most transformative technologies of our time.

AI today is not limited to researchers or enthusiasts. It is fundamentally reshaping industries. From healthcare and finance to energy and entertainment, LLMs are driving efficiency, improving decision-making, and creating new opportunities. By combining human expertise with AI’s ability to analyse and generate information at scale, we are witnessing smarter, faster, and more personalised systems. However, this growing adoption also raises an important question: is AI sustainable?

The Growing Environmental Footprint of AI

As AI usage expands, so does its appetite for energy. Data centres currently account for around 1.5 percent of global electricity consumption, and this figure is projected to nearly double by 2030. The rapid build-out of massive AI data centres housing thousands of high-performance GPUs has accelerated this trend. To put this into perspective, a single NVIDIA H100 GPU consumes roughly 3,740 kWh of electricity annually, about 2.7 times India’s per capita annual electricity consumption. A 100-megawatt data centre can consume as much electricity as 300,000 Indian households*. Nearly all of this electrical energy is converted into heat, which must be removed by cooling systems that often consume large volumes of water. The environmental impact of AI therefore extends well beyond electricity to include significant water use.
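The per-GPU and per-facility figures above can be checked with simple arithmetic. The sketch below is a back-of-envelope reconstruction, not the Vasudha Foundation’s method: it assumes a 700 W board power for the H100 running at about 61 percent average utilisation, and a 100 MW facility drawing its full rated load all year. Under those assumptions the GPU figure comes out at roughly 3,740 kWh, and the two comparisons imply about 1,385 kWh per person and about 2,920 kWh per household per year.

```python
# Back-of-envelope check of the electricity figures quoted above.
# Assumptions (not from the source): 700 W H100 board power at ~61% average
# utilisation, and a 100 MW data centre drawing its full rated load all year.

HOURS_PER_YEAR = 24 * 365  # 8,760 h in a non-leap year


def annual_energy_kwh(power_watts: float, utilisation: float = 1.0) -> float:
    """Energy in kWh drawn over one year at a constant average load."""
    return power_watts * utilisation * HOURS_PER_YEAR / 1000


# A single H100 at 700 W and ~61% average utilisation (assumed).
gpu_kwh = annual_energy_kwh(700, utilisation=0.61)

# Per capita consumption implied by the "about 2.7 times" comparison.
implied_per_capita_kwh = 3740 / 2.7

# A 100 MW facility at full load (assumed) for one year.
dc_kwh = annual_energy_kwh(100e6)

# Per-household consumption implied by the "300,000 households" comparison.
implied_household_kwh = dc_kwh / 300_000

print(f"H100 per year:           {gpu_kwh:,.0f} kWh")
print(f"Implied per capita use:  {implied_per_capita_kwh:,.0f} kWh")
print(f"100 MW data centre/year: {dc_kwh / 1e6:,.0f} GWh")
print(f"Implied per household:   {implied_household_kwh:,.0f} kWh")
```

Changing the utilisation or load assumptions shifts every figure proportionally, which is one reason published estimates of this kind can differ.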

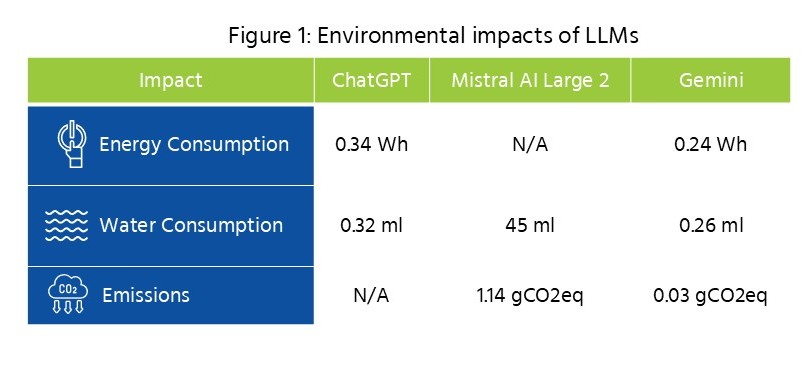

Beyond energy, the broader environmental footprint of AI is even more complex. The energy, emissions, and water figures that developers report for leading LLMs (Figure 1**) reflect only the marginal cost of inference, i.e., the resources used to generate responses once a model has been trained. A complete life-cycle analysis would also need to account for data aggregation and storage, model training and retraining, data centre construction, hardware manufacturing, and eventual disposal. Despite growing awareness, there is still no universally accepted methodology for quantifying AI’s environmental impact. Establishing one should be a priority, as it would help developers, policymakers, and consumers make informed decisions about the true costs of AI.
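One simple way to move from a marginal, per-query figure towards a life-cycle figure is to spread the non-inference costs across every query a model serves. The sketch below assumes an equal-share allocation rule, which is only one of several possible allocation methods; the class, its field names, and the placeholder values in the usage example are hypothetical and chosen for easy arithmetic, not estimates for any real model. The same accounting works for energy, emissions, or water, provided all inputs use one consistent unit.

```python
from dataclasses import dataclass


@dataclass
class LifecycleFootprint:
    """Hypothetical life-cycle inputs for one deployed model.

    All values share one unit (e.g. kWh, kg CO2e, or litres of water).
    """
    inference_per_query: float     # marginal cost: what per-query figures report
    data_storage: float            # data aggregation and storage
    training: float                # training and retraining runs
    construction: float            # allocated share of data centre construction
    hardware_manufacturing: float  # embodied cost of chips and servers
    disposal: float                # end-of-life handling
    lifetime_queries: int          # queries served over the deployment

    def non_inference_costs(self) -> float:
        """Costs that a marginal, per-query inference figure leaves out."""
        return (self.data_storage + self.training + self.construction
                + self.hardware_manufacturing + self.disposal)

    def per_query(self) -> float:
        """Marginal inference cost plus an equal share of the other costs."""
        return self.inference_per_query + self.non_inference_costs() / self.lifetime_queries

    def marginal_share(self) -> float:
        """Fraction of the life-cycle per-query cost that inference alone captures."""
        return self.inference_per_query / self.per_query()


# Placeholder values for illustration only; not measurements.
example = LifecycleFootprint(
    inference_per_query=1.0,
    data_storage=100_000,
    training=400_000,
    construction=200_000,
    hardware_manufacturing=250_000,
    disposal=50_000,
    lifetime_queries=1_000_000,
)
print(example.per_query())       # 2.0
print(example.marginal_share())  # 0.5
```

With these placeholder inputs, a marginal-only disclosure would capture half of the per-query footprint; with real inputs, the split depends heavily on how many queries the model serves over its lifetime.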

Insights from Google’s Research

Google’s 2025 paper on the environmental impact of AI is a step in this direction. It advocates a full-stack measurement approach that accounts for every stage of AI operation, from chip-level power consumption to data centre cooling. It also emphasises carbon-aware computing, in which computation is shifted to times and locations where renewable energy is most available. Even so, the research acknowledges significant challenges. The energy consumption of AI varies widely with the model and the type of query: a simple text-generation request may use far less energy than an agentic query that combines web search, reasoning, and synthesis. Newer techniques such as energy-based transformers (where ‘energy’ is a learned score used to evaluate predictions, not electricity) aim to improve prediction quality and interpretability but may also increase computational requirements. True sustainability will require advances in both hardware efficiency and software optimisation.
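Carbon-aware scheduling can be illustrated with a small search over a forecast. The sketch below is not Google’s implementation; it assumes an hourly forecast of grid carbon intensity (gCO2e/kWh) for each candidate region, a flexible job of fixed duration, and a constant power draw, so that emissions equal the job’s energy multiplied by the mean intensity over its window. The function names, region names, and forecast values are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Window = Tuple[str, int, float]  # (region, start hour, mean gCO2e/kWh)


def best_window(forecast: Dict[str, List[float]], duration_h: int) -> Window:
    """Find the region and start hour with the lowest mean carbon intensity."""
    if duration_h < 1:
        raise ValueError("duration_h must be at least one hour")
    best: Optional[Window] = None
    for region, hourly in forecast.items():
        # Slide a window of duration_h hours across this region's forecast.
        for start in range(len(hourly) - duration_h + 1):
            mean = sum(hourly[start:start + duration_h]) / duration_h
            if best is None or mean < best[2]:
                best = (region, start, mean)
    if best is None:
        raise ValueError("no forecast covers a window of the requested length")
    return best


def job_emissions_g(energy_kwh: float, mean_intensity: float) -> float:
    """Emissions in grams, assuming constant power draw over the window."""
    return energy_kwh * mean_intensity


# Hypothetical six-hour forecasts for two regions (gCO2e/kWh).
forecast = {
    "region-a": [450, 420, 300, 250, 260, 400],
    "region-b": [380, 370, 360, 350, 340, 330],
}
region, start, mean = best_window(forecast, duration_h=2)
print(region, start, mean)         # region-a 3 255.0
print(job_emissions_g(100, mean))  # 25500.0

# For comparison: running the same 100 kWh job immediately in region-a.
naive_mean = sum(forecast["region-a"][0:2]) / 2
print(job_emissions_g(100, naive_mean))  # 43500.0
```

An exhaustive sliding-window search is enough for a handful of regions and a short forecast horizon; a production scheduler would also have to weigh deadlines, capacity limits, and data-transfer costs, which this sketch ignores.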

The Awareness Gap

While major technology firms explore such solutions, a large part of the sustainability challenge lies with users, most of whom remain unaware of the ecological cost of their AI usage. For instance, vibe coders who use tools like Cursor or Windsurf to build minimum viable products (MVPs) often rely on large, resource-intensive models for simple tasks that smaller, more efficient models could handle. Just as governments require energy ratings on household appliances, the developers of these tools should be required to make smaller, energy-efficient models the default option. Individual users would also benefit from transparent disclosures of the environmental cost of each query, including estimated energy consumption, carbon emissions, and water usage. Such transparency could encourage more responsible use and reduce unnecessary computational demand.
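The two proposals above, small models by default and per-query disclosure, can be sketched together. This is a hypothetical illustration, not any tool’s actual behaviour: the model names and per-query energy values are placeholders, and the conversion assumes two standard data centre metrics, Power Usage Effectiveness (PUE, total facility energy divided by IT energy) and Water Usage Effectiveness (WUE, litres of on-site water per kWh of IT energy), plus an assumed grid carbon intensity. It counts only on-site cooling water, not water used to generate the electricity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    it_energy_wh: float  # IT (server) energy per query; placeholder value


# Hypothetical profiles; real per-query energy varies by model and query type.
SMALL = ModelProfile("small-model", 1.0)
LARGE = ModelProfile("large-model", 10.0)


def pick_model(needs_large: bool = False) -> ModelProfile:
    """Default to the small model; the large model is an explicit opt-in."""
    return LARGE if needs_large else SMALL


@dataclass(frozen=True)
class QueryDisclosure:
    energy_wh: float  # facility energy, including cooling overhead
    carbon_g: float   # grams CO2e
    water_ml: float   # on-site cooling water only


def disclose(model: ModelProfile, pue: float,
             grid_g_per_kwh: float, wue_l_per_kwh: float) -> QueryDisclosure:
    """Convert a model's per-query IT energy into a user-facing disclosure."""
    it_kwh = model.it_energy_wh / 1000
    facility_kwh = it_kwh * pue  # PUE scales IT energy up to facility energy
    return QueryDisclosure(
        energy_wh=facility_kwh * 1000,
        carbon_g=facility_kwh * grid_g_per_kwh,
        water_ml=it_kwh * wue_l_per_kwh * 1000,  # WUE is per kWh of IT energy
    )


# Assumed site and grid parameters, for illustration only.
for model in (pick_model(), pick_model(needs_large=True)):
    d = disclose(model, pue=1.1, grid_g_per_kwh=700, wue_l_per_kwh=1.8)
    print(f"{model.name}: {d.energy_wh:.2f} Wh, "
          f"{d.carbon_g:.2f} g CO2e, {d.water_ml:.2f} mL water")
```

Because every quantity scales linearly with per-query IT energy, making the smaller model the default reduces all three disclosed figures by the same factor.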

Building a Sustainable AI Future

To minimise AI’s environmental footprint while sustaining innovation, progress must occur on multiple fronts. Model optimisation should focus on improving knowledge synthesis, efficiency, and transfer learning rather than simply increasing model size. Regulatory frameworks can help by introducing energy-efficiency standards similar to those in the appliance sector. Finally, industry-wide collaboration is essential. The AI Action Summit, held in Paris in 2025, has already initiated efforts through the Coalition for Sustainable AI to align AI development, spanning hardware and software, with global environmental goals.

Striking the Right Balance

AI and LLMs are now integral to everyday life. Balancing innovation with transparency, efficiency with accessibility, and progress with planetary well-being is the only path forward. The future of AI should be not just intelligent but also sustainable.

* Vasudha Foundation Analysis

** The differences across models in Figure 1 reflect variations in the methodology each developer adopted. Refer to the linked sources for details of each methodology.